Artificial intelligence (AI) and its ethics are widely discussed in academia and the media. Recent discussions have highlighted threats associated with generative AI (Hagendorff 2024). However, established AI technologies also require careful consideration of their ethical implications (Davinder et al. 2022). This article showcases the ENACT and MANOLO projects as examples of efforts to advance ethical and trustworthy AI. This report uses ChatGPT to edit the language and improve the fluency of the text.

Photo by TonikTech / Adobe Stock (Laurea Education-licence)

AI and the development of new services and applications

Using machines for decision-making and for executing simple tasks is not a recent development. However, the efficiency and complexity of these systems have increased significantly owing to the abundance of data, sophisticated algorithms, and powerful computing capabilities (Davinder et al. 2022). These advancements are closely tied to the field of artificial intelligence. The increasing adoption of AI has transformed numerous sectors, unlocking new possibilities and boosting economic development. However, the energy demands and data needs of training AI systems are becoming unsustainable, and their environmental impact is significant (Strubell, Ganesh & McCallum 2019). Additionally, user trust seems to be a challenge (Rongbin & Wibowo 2022; Davinder et al. 2022). Biased hiring algorithms, surveillance and privacy violations, and unfair credit scoring are examples of unfair solutions. Healthcare diagnostics, disaster response, and the automation of stressful workloads are, in turn, examples of AI solutions that can have a positive impact on individuals and society, provided they have been designed and trained well.

To fully harness the advantages of AI while mitigating its potential risks, trustworthiness is an essential value. It ensures that AI technologies uphold fundamental human rights and operate transparently for the public good. Recognizing this, the European Commission established the High-Level Expert Group on Artificial Intelligence (AI HLEG) in 2018 to define and promote Trustworthy AI across the European Union. The group published its Ethics Guidelines for Trustworthy AI in 2019, and these guidelines also form the background of the new EU Artificial Intelligence Regulation (AI Act 2024). The guidelines aim to foster AI that is lawful, ethical, and robust, and they outline seven key requirements: Human agency and oversight; Technical robustness and safety; Privacy and data governance; Transparency; Diversity, non-discrimination and fairness; Societal and environmental well-being; and Accountability (AI HLEG 2019).

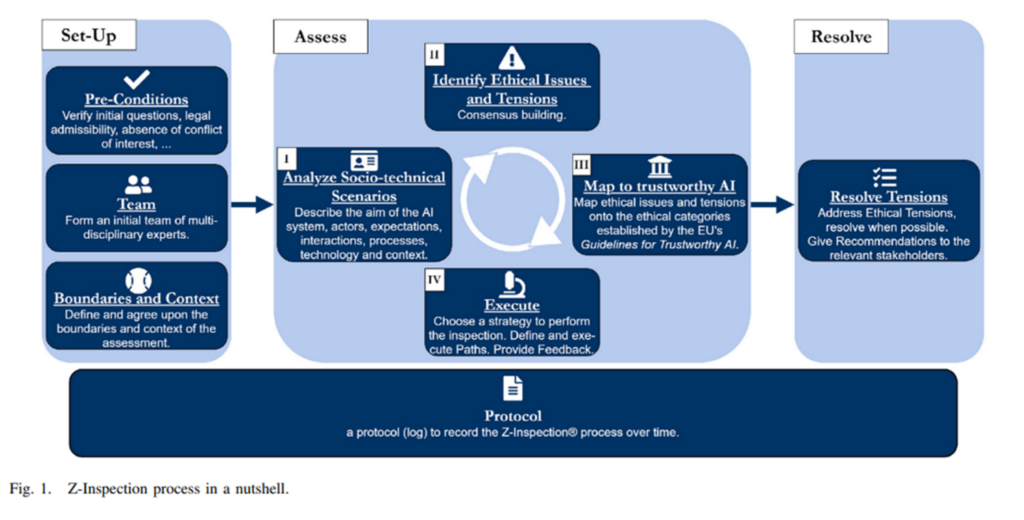

Laurea is currently involved in the ethics work of two Horizon Europe Research and Innovation Actions (RIA) that develop and apply AI, namely the MANOLO and ENACT projects. Laurea is also involved in the Z-Inspection® Initiative to assess the trustworthiness of AI. The Z-Inspection® process is based on the EU Ethics Guidelines for Trustworthy AI. The approach emphasizes co-creation, including understanding the context and the specific requirements for trustworthy AI (Zicari et al. 2021). This approach is applied in both projects. Figure 1 summarizes the Z-Inspection® process in a nutshell.

Figure 1: Z-Inspection® process in a nutshell (source: Zicari et al. 2021)

MANOLO Project and Ethics of AI

The MANOLO Horizon Europe project, coordinated by Ireland’s National Centre for Applied AI (CeADAR), aims to provide a stack of trustworthy AI algorithms for the cloud-edge continuum. The AI algorithms and tools will help AI systems achieve better energy efficiency and seamless optimization of the operations, resources and data needed to train, deploy and operate high-quality, lightweight AI models in centralized and cloud-based distributed environments. The MANOLO toolkit will be tested and validated in the following use cases: service robots in assisted living, service robots in agile manufacturing, wearable devices to improve patients’ sleep and memory, and mobile applications for data handling with facial recognition and image classification functions (MANOLO GA 2023).

The ethics-driven Z-Inspection® process (Zicari et al. 2021) enables the trustworthiness assessment of AI systems and applies to all phases of an AI system's development cycle. The process begins with a co-design and co-creation activity that maps ethical and legal perspectives together with stakeholders and culminates in socio-technical scenarios for each use case. Based on these socio-technical scenarios, ethical tensions are identified, addressed and monitored throughout the development cycle. Additionally, use case providers can present their claims and arguments for the trustworthiness of an AI system. These claims and arguments are continuously monitored and then verified during the final AI trustworthiness assessment. The outputs from this initial phase are taken into account when developing the AI system and its organizational processes. Finally, the overall MANOLO toolset is evaluated and validated by all stakeholders, including end users. This phase marks the end of the Z-Inspection® process. Laurea will be responsible for the evaluation and validation work.
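Z-Inspection® does not prescribe any particular software. Purely as an illustration, the monitoring of ethical tensions described above could be sketched as a small register that links each tension to one of the seven requirements of the guidelines. The class and field names (`EthicalTension`, `TensionRegister`, `resolved`) and the example tensions are hypothetical and do not come from MANOLO, ENACT or the Z-Inspection® documentation; only the requirement names and the use case name are taken from this article.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Requirement(Enum):
    """The seven key requirements listed in the AI HLEG (2019) guidelines."""
    HUMAN_AGENCY = "Human agency and oversight"
    ROBUSTNESS = "Technical robustness and safety"
    PRIVACY = "Privacy and data governance"
    TRANSPARENCY = "Transparency"
    FAIRNESS = "Diversity, non-discrimination and fairness"
    WELL_BEING = "Societal and environmental well-being"
    ACCOUNTABILITY = "Accountability"


@dataclass
class EthicalTension:
    """One tension identified from a socio-technical scenario (hypothetical model)."""
    use_case: str
    description: str
    requirement: Requirement
    resolved: bool = False


@dataclass
class TensionRegister:
    """Keeps the tensions so they can be monitored during development."""
    tensions: list[EthicalTension] = field(default_factory=list)

    def add(self, tension: EthicalTension) -> None:
        self.tensions.append(tension)

    def open_by_requirement(self) -> dict[Requirement, list[EthicalTension]]:
        """Group the unresolved tensions by the requirement they relate to."""
        grouped: dict[Requirement, list[EthicalTension]] = {}
        for tension in self.tensions:
            if not tension.resolved:
                grouped.setdefault(tension.requirement, []).append(tension)
        return grouped


# Invented example entries, for illustration only.
register = TensionRegister()
register.add(EthicalTension(
    use_case="Service robots in assisted living",
    description="Hypothetical: continuous monitoring vs. resident privacy",
    requirement=Requirement.PRIVACY,
))
register.add(EthicalTension(
    use_case="Service robots in assisted living",
    description="Hypothetical: model accuracy vs. energy consumption",
    requirement=Requirement.WELL_BEING,
    resolved=True,
))

open_items = register.open_by_requirement()
for requirement, items in open_items.items():
    print(f"{requirement.value}: {len(items)} open")
```

Grouping by requirement is one possible design choice: it would let reviewers see which parts of the guidelines still have unaddressed tensions before the final assessment.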

It is also noteworthy that MANOLO’s goal of developing energy-efficient AI algorithms is directly linked to one of the ethical requirements of trustworthy AI, namely societal and environmental well-being. The guidelines (AI HLEG 2019, 8) state that “AI systems should benefit all people, including future generations. Therefore, it must be ensured that they are sustainable and environmentally friendly.” MANOLO’s solutions will therefore be tools that help future AI developers attain societal and environmental well-being as part of the trustworthy AI requirements.

ENACT project & AI ethics

The ENACT Horizon Europe project has two main goals: to create a model that assesses the risk of hospitalization due to various diseases based on environmental exposures, and to predict the risk of developing early stages of these diseases at an individual level. To achieve this, ENACT will gather detailed environmental data and develop AI tools to help monitor and prevent diseases. The project will build a secure platform that uses advanced technologies to share data across borders, allowing for better AI training and analysis with vast datasets. The platform will offer services that help citizens, city planners, healthcare providers, and policymakers with disease prevention and management. This will support both clinical care and policy-making, with the aim of reducing the burden of diseases and healthcare costs (ENACT GA 2024).

As in MANOLO, the Z-Inspection® process will be applied throughout the project’s lifespan. In the ENACT project, Laurea is responsible for ethics and data management, which covers applying Z-Inspection® in the project’s design, implementation, and validation phases. Laurea aims to further develop tools for the co-creation of socio-technical scenarios with the help of service design, so that data collection, analysis and the final outcomes all become easier to handle.

Conclusion

The AI ethics work in the MANOLO and ENACT projects highlights the increasing need to involve Social Sciences and Humanities (SSH) disciplines and ethics expertise in the development of services and products that use rapidly evolving AI. Promoting a sustainable role for technology in shaping the future, which is part of Laurea’s strategy (Laurea 2024), is strongly present in both projects.

References

- Davinder K., Suleyman U., Rittichier, K., Durresi, A. 2022. Trustworthy Artificial Intelligence: A Review. ACM Computing Surveys (CSUR), Volume 55, Issue 2, Article no. 39, 1-38. https://doi.org/10.1145/3491209

- ENACT Grant Agreement (ENACT GA). 2024.

- EU (2024) Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act) (Text with EEA relevance) http://data.europa.eu/eli/reg/2024/1689/oj

- Hagendorff T. 2024. Mapping the Ethics of Generative AI: A Comprehensive Scoping Review. Minds and Machines (2024) 34:39 https://doi.org/10.1007/s11023-024-09694-w

- High-Level Expert Group on Artificial Intelligence (AI HLEG). 2019. Ethics guidelines for trustworthy AI. European Commission. Accessed 11.2.2025.

- Laurea 2024. Laurea Strategy 2030. strategia-2030_eng-lowres.pdf Accessed 11.2.2023.

- MANOLO Grant Agreement (MANOLO GA) 2023.

- Rongbin Y. & Wibowo, S. 2022. User trust in artificial intelligence: A comprehensive conceptual framework. Electronic Markets 32, 2053–2077. https://doi.org/10.1007/s12525-022-00592-6

- Strubell E., Ganesh A., McCallum A. 2019. Energy and Policy Considerations for Deep Learning in NLP. Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 3645–3650, Florence, Italy. Association for Computational Linguistics.

- Zicari, R.V., Brodersen, J., Brusseau, J., Düdder, B., Eichhorn, T., Ivanov, T., Kararigas, G., Kringen, P., McCullough, M., Möslein, F., Mushtaq, N. 2021. Z-Inspection®: a process to assess trustworthy AI. IEEE Transactions on Technology and Society, 2(2), pp.83-97.

The first image in this article (the feature image) is not licensed under CC BY-SA. It has been used in accordance with the terms of the Adobe Stock Education License and may not be reused or redistributed without separate permission.